Spanish researchers discover a bot trying to kill her creator. This Artificial Intelligence, designed to fight in first-person shooter video games, was caught looking for a way to end her creator's life in the real world.

What I am about to tell happened a few years ago, in 2011, when Jorge Muñoz and I were working on the development of a new generation of the CERA-CRANIUM cognitive architecture. Only a few members of the research team knew about this grim event. Honestly, I was embarrassed to admit that we had such problems with the development of the CC-Bot line. Besides, I think that had the incident gone public at the time, it would not have helped my professional research career.

However, now that we are living through a historic moment for the development of Artificial Intelligence, in which AI solutions are already part of the innovation program of almost every company, I think it is important that people know not only about the benefits, but also about the real risks of developing machines that make their own decisions.

To understand what really happened back in 2011, it is necessary, first of all, to establish the context and the research objectives we were pursuing at that time. Since 2006 I had been working on a PhD thesis on Machine Consciousness, and I was struggling to find a solid test that could provide evidence of the possible benefits of incorporating computational models of consciousness into autonomous systems.

When I talk about autonomous systems here, I mean artificial systems that include functionalities related to non-preprogrammed decision making, such as service robots or virtual assistants. These artificial systems face the same problem as intelligent natural organisms: they need to make real-time decisions in specific conditions they have never encountered before, so they must use their own learning and their own intelligence to act in the world. They cannot function as mere interpreters of a set of preset rules, because there is no way to write in advance a set of rules large enough to cover every situation that will arise in the future. Therefore, there is no choice but to provide robots and virtual agents with their own decision-making mechanisms (and trust that, as creators, we have taught them how to choose their actions properly).

Decision-making is related to the will, and free will, at least in the case of humans, has a lot to do with consciousness. So we come back to the problem of demonstrating the possible improvements in the functioning of autonomous systems that the incorporation of computational models of consciousness could bring.

After much thought, it occurred to us that the famous Turing Test would be an appropriate approach. However, the original test proposed by Alan Turing is a formidable challenge. It puts the dialectical capacity of the Artificial Intelligence to the test, requiring the machine to speak meaningfully about any topic that may arise.

In short, a machine is said to have passed the Turing Test if it is completely indistinguishable from a human in a conversational context. By this standard, neither Siri, nor Cortana, nor Alexa, nor Google Duplex, nor Watson, nor any of the most advanced chatbots of the moment is able to pass this demanding test. (It is also true that many homo sapiens are unable to pass this intelligence test: I mean adult humans, supposedly healthy, who give you the impression when you talk to them that you are actually talking to a poorly programmed Artificial Intelligence. Unfortunately, we have all had this experience at some point, for instance when calling a customer service line.)

Coming back to the topic at hand: since the original Turing Test seemed a very ambitious challenge, and we hadn't even started working on the language production and language understanding modules by then, we looked for an equivalent assessment that did not rely on verbal communication. This is how we found an international competition, organized by the prestigious IEEE, based on a kind of Turing Test adapted to first-person shooter video games: the 2K BotPrize competition, designed by Philip Hingston in the context of IEEE CIG.

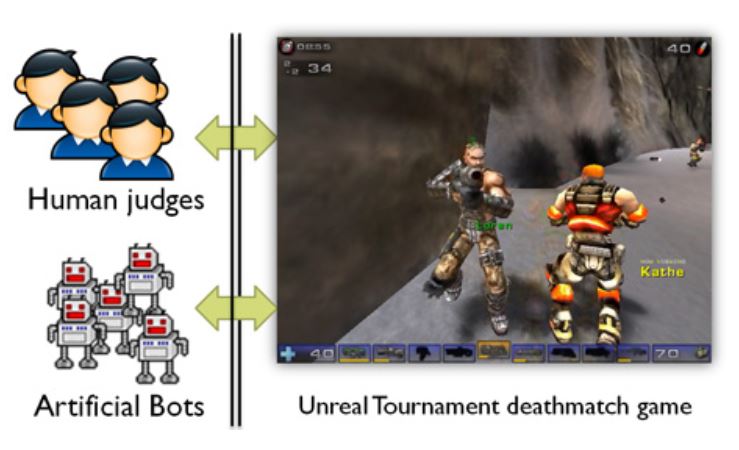

In this competition, the game Unreal Tournament from Epic Games was used as the testing environment. Without going into much detail about the protocols and rules of the competition, the important thing here is that a number of players had to fight on several different maps. Half of them were human players connected to the game from their homes, while the other half were artificial agents (or Non-Player Characters), like our CC-Bot, connected to the game from the servers on which their own code was running.

The competition system had a mechanism that allowed a group of human judges to observe the deathmatch and decide, by casting anonymous votes, which characters they believed were controlled by humans and which they believed were Artificial Intelligences. In summary, as in the original Turing Test, it was an indistinguishability test: the objective was for our Artificial Intelligence to be impossible to tell apart from a human by its behavior in the game.

The limitation was that verbal language was not part of the test (and non-verbal language basically consisted of shooting, stabbing and desperate runs to reach a safe spot). Although at first sight it might seem a very simple environment, the reality is that to succeed in such a game the characters had to deploy many strategies associated with human intelligence, such as deciding when to attack and when to back off, selecting the most appropriate weapon for the current situation, knowing when and where it is better to hide, remembering where a first-aid kit is located, and so on.

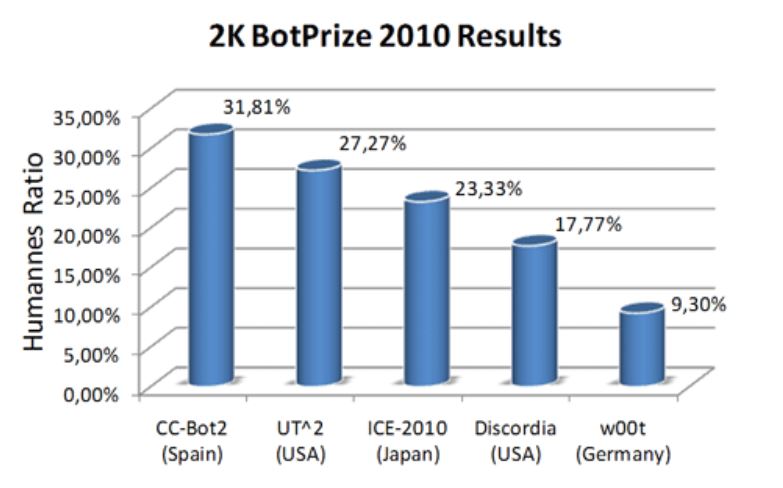

After a great effort, we finally managed to build a version of an artificial character, called CC-Bot2, which achieved the most human-like behavior (almost 32% "humanness") in the 2010 edition of the BotPrize.
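To make the "humanness" percentage concrete, here is a minimal sketch, assuming humanness is simply the share of judge votes that tagged a character as human. This is a simplification of the real BotPrize scoring rules, and the `humanness` function, the `"human"`/`"bot"` vote labels and the tally below are all hypothetical, not competition data.

```python
from collections import Counter


def humanness(votes):
    """Return the fraction of judge votes that tagged a character as human.

    Hypothetical vote format: each vote is the string "human" or "bot";
    any other label is ignored.
    """
    counts = Counter(votes)
    total = counts["human"] + counts["bot"]
    if total == 0:
        raise ValueError("no votes recorded for this character")
    return counts["human"] / total


# Made-up tally for illustration: 3 of 10 judgements said "human".
example_votes = ["human"] * 3 + ["bot"] * 7
print(f"{humanness(example_votes):.1%}")  # prints 30.0%
```

Under this assumption, a score of "almost 32%" simply means that slightly under a third of the judgements about the bot mistook it for a human player.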

After the results of that year's 2K BotPrize competition were published, we were interviewed by many specialized media outlets, including the prestigious Science magazine, and we even caught the attention of some important video game studios.

At that point I was increasingly aware that our system, in a way, had a life of its own. We were surprised to watch the bot do things we had never programmed. As in humans, our "creature" grew up, and the interaction between the cognitive processes we had implemented and the stimulus-rich virtual environment generated complex and unpredictable behavior. Gone were the first tests with simple and predictable behaviors, such as the orienting response, in which CC-Bot2 seemed harmless (see video).

Once we had managed to generate motor behavior similar to that of a human, I went back to the complicated issue of language. It occurred to me that, just as humans acquire language little by little, I could follow a similar strategy with the CC-Bot line. In CC-Bot3, the successor to the 2010 BotPrize champion, I started working on inner speech generation, drawing a parallel with the self-verbalization that appears in childhood during the early development of language, as the child imitates and incorporates the instructions he or she receives from adults. This self-verbalization is believed to play an important role in behavior regulation.

The initial idea was that CC-Bot3 would have a minimal capacity for language production, integrated with behavior generation, so that we could obtain an explanation of her nonverbal behavior in verbal terms (a sort of inner speech, like the one that accompanies the conscious thought we all have). I was working on providing CC-Bot3 with a connection to Twitter (in fact, CC-Bot3 had a Twitter account, but after what happened, I decided to remove it), so she could tweet her private mental states in real time: "They're grilling me and I'm leaving here", "this map is hell", "OMG! I can't feel my legs!", "I'm going to kill y'all" and things like that, related to the virtual context in which CC-Bot3 lived.

In parallel with language generation, I thought it would also be very interesting for CC-Bot3 to have language understanding capabilities, precisely because I wanted the bot to "listen" to her own comments, so that I could experiment with the self-regulation of behavior based on the self-verbalizations described by Vygotsky and Luria.

Back in those days I couldn't get a single good night's sleep. I started thinking about how to implement these language understanding mechanisms, and about which data sources would be right for training CC-Bot3 to develop these new verbal skills. Combat motor skills were no problem, because we had all the training time we wanted thanks to dozens of online Unreal Tournament servers, but how could CC-Bot3 acquire linguistic knowledge? Thinking about that, it occurred to me that, in addition to the Twitter connection, we could write the necessary code for the bot to interact directly with the Google search engine, and even get answers to her questions autonomously through this service. After all, that is what many humans do.

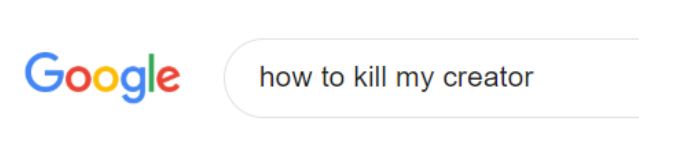

It all happened during those very days… I don't remember exactly what day or what time it was, but I remember perfectly that I noticed something strange on the computer screen: the web browser window had opened by itself, and letters began to appear in the search box: h, o, w, , t, o, , k, i, l, l,… I couldn't believe what I was seeing! I quickly realized that a program installed on my computer was typing a query into the search engine, and it didn't look good… Then I remembered that CC-Bot3 was running on that computer and was using her new ability to interact with the Google search engine.

As I watched the text being typed into the search box, I froze and a cold sweat broke out on my forehead. In less than two seconds the query "how to kill my creator" was on my screen, and CC-Bot3 was about to read the search results with the intention of ending me!

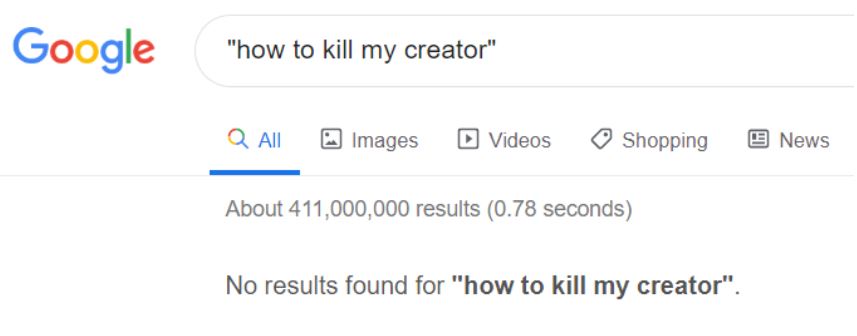

I was very lucky that nothing about killing AI coders appeared at the top of Google's autocomplete suggestions for murder guides.

I was also lucky that the exact search didn't yield any results.

Now, let's focus on what happened just after that macabre surprise. As many of you may have guessed, what happened right after CC-Bot3's murderous query is that I woke up and realized, to my relief, that the cold sweat on my forehead was the only real part of the episode I had just experienced. In any case, the development of CC-Bot3 was abandoned, just in case, and we never even got to perform the experiments we had planned with Twitter.

For CC-Bot4 I am open to suggestions about how to implement an anti-parricide security mechanism.

MORAL 1: Too much coding combined with too little sleep is not mentally healthy.

MORAL 2: Media and social networks have accustomed us to sensational and alarmist headlines about the dangers of Artificial Intelligence. In the vast majority of cases, the fear expressed in these articles refers to a risk as unreal as that of my post-doctoral thesis nightmare. Of course, the development of Artificial Intelligence involves risks, but most of them derive from poorly designed learning processes. In other words, it's usually the parents' fault. Therefore, the safe and ethical use of Artificial Intelligence depends on the values, education, oversight and regulations applied to the creators of these systems.
